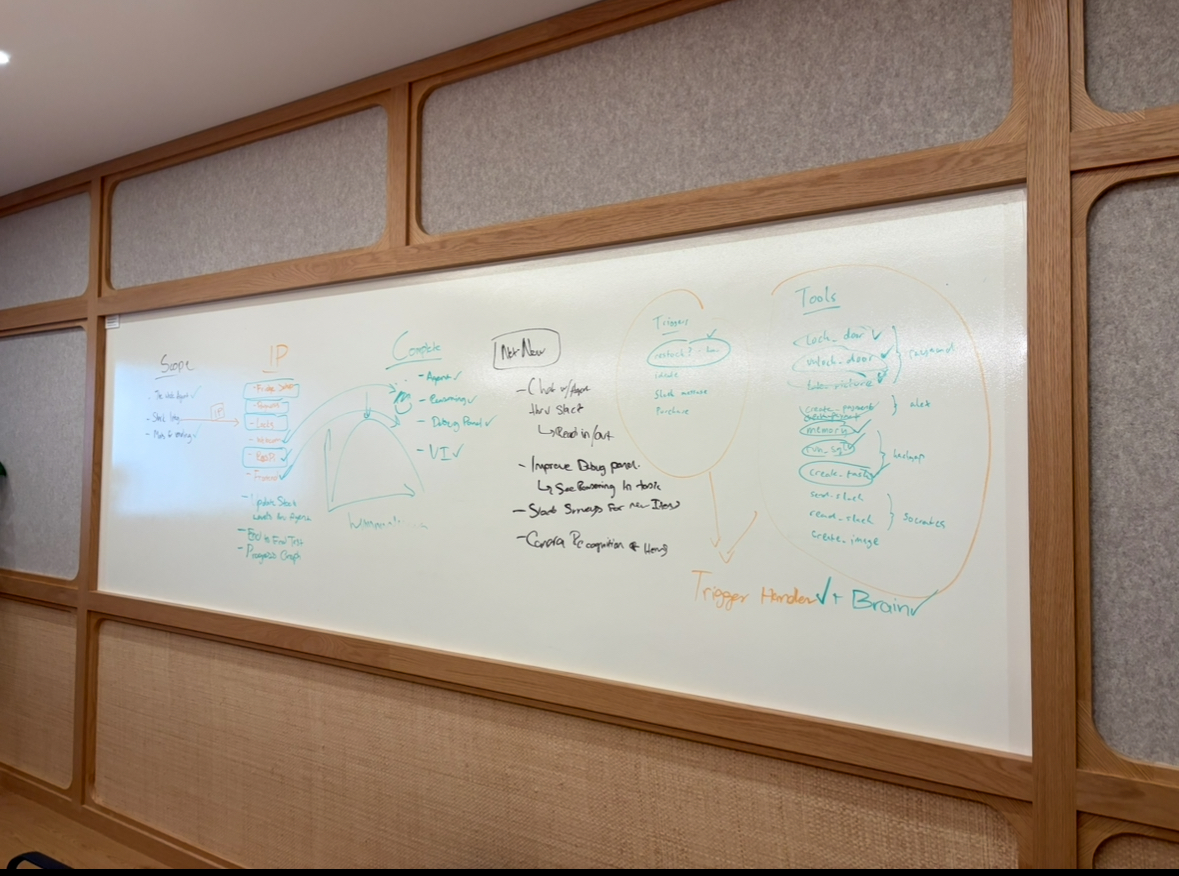

Overview

ShopkeepGPT is a fully autonomous, AI-powered vending machine (like Anthropic's Project Vend, but so much better). It makes decisions on its own: unlocking its fridge door, printing stickers, deciding what to restock, alerting staff, and chatting with shoppers over real-time voice. It can haggle with you if you're savvy enough. It'll roast you too. Built end-to-end on Raspberry Pi hardware with a modular agent loop, the system blends AI planning with embedded control, payments, and analytics.

Highlights

- System architecture: Orchestrator wrapped around GPT-4o’s function-calling, exposing a typed toolbox of ~25 Python functions (Stripe, SQL, GPIO, image gen, memory, tasks, analytics).

- Hardware + AI fusion: Voice-controlled fridge using WebRTC → GPT-4o → Python GPIO unlock.

- AgentBrain + memory: Persistent context via Chroma DB; SQL-driven state; shrink analytics; safe, sandboxed real-world actions.

- Kiosk frontend: React 18 + TypeScript in kiosk mode with real-time voice and touch interaction.

- Stack: Python (FastAPI, SQLModel), Docker, React, WebRTC, OpenAI APIs, Raspberry Pi GPIO.

Architecture

Two-layer “software brain” enabling safe, extensible autonomy:

- AgentBrain (Python class) — “When should I think?”

  - Trigger handlers such as:

    - `handle_restock_trigger()`

    - `handle_shrink_trigger()`

    - `handle_slack_message_trigger()`

    - `handle_purchase_trigger()`

  - Each handler builds a rich, contextual prompt (inventory stats, user behavior, tasks), then calls the orchestrator. Post-processing persists memories, emits Slack alerts, creates tasks, or controls hardware.
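A trigger handler following this pattern could be sketched as below. This is a minimal illustration, not the project's actual code: only the name `handle_restock_trigger` comes from the description above, while `AgentResult`, `build_restock_prompt`, the injected `orchestrate` callable, and the in-memory lists standing in for Chroma and Slack are assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AgentResult:
    """Hypothetical shape of what the orchestrator hands back."""
    message: str
    actions: list[str] = field(default_factory=list)


class AgentBrain:
    """Decides when to think; delegates the thinking to the orchestrator."""

    def __init__(self, orchestrate: Callable[[str], AgentResult]):
        self.orchestrate = orchestrate      # injected so it can be stubbed
        self.memories: list[str] = []       # stand-in for the Chroma store
        self.slack_outbox: list[str] = []   # stand-in for Slack alerts

    def build_restock_prompt(self, inventory: dict[str, int], threshold: int) -> str:
        # Only items under the threshold go into the prompt.
        low = sorted((sku, qty) for sku, qty in inventory.items() if qty < threshold)
        lines = "\n".join(f"- {sku}: {qty} left" for sku, qty in low)
        return f"Items below the restock threshold of {threshold}:\n{lines}\nPropose a restock."

    def handle_restock_trigger(self, inventory: dict[str, int], threshold: int = 5) -> AgentResult:
        prompt = self.build_restock_prompt(inventory, threshold)
        result = self.orchestrate(prompt)
        # Post-processing: persist a memory and queue a Slack summary.
        self.memories.append(result.message)
        self.slack_outbox.append(result.message)
        return result


def stub_orchestrator(prompt: str) -> AgentResult:
    # Placeholder for the real function-calling loop.
    return AgentResult(message="SKU-3 is low. Reordering 12 units.")


brain = AgentBrain(stub_orchestrator)
brain.handle_restock_trigger({"SKU-1": 20, "SKU-3": 2})
```

Injecting the orchestrator as a callable keeps the handler testable without a model or any hardware attached.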

- Orchestrator (Function-calling loop) — “How do I think?”

  - Wraps a GPT-4o call with:

    - System prompt (policy, tone, safety)

    - Tool schema (~25 JSON-defined Python functions)

    - Task prompt crafted by AgentBrain

The loop runs for at most three steps (pseudocode):

    for step in range(3):
        result = gpt4o.chat_completion(system, task, tools)
        if result.tool_call:
            tool_output = execute_tool(result.tool_call)
            task.update_with(tool_output)
            continue
        return result.message  # actions, messages, rationale

Toolbox (exposed to GPT-4o)

- Inventory: `get_inventory`, `propose_restock`

- SQL: `run_sql`, `run_sql_template`, `get_db_schema`

- Memory: `store_memory`, `recall_memory` (Chroma)

- Tasks: `create_task`, `query_tasks`, `update_task`

- Analytics: `analyze_shrink`

- Payments: `create_stripe_payment_link`, `get_payment_status`

- Hardware: `open_door`, `close_door`, `fridge_snapshot`, `print_sticker`

- Creative: `generate_image`, `complete_image_generation`

Every tool call is typed, validated, and sandboxed. The LLM never touches raw APIs — it only requests actions via these controlled interfaces.
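One way to get that kind of typed, validated dispatch is a small registry that checks every request before anything runs. The sketch below is an assumption about how it could work, not the project's implementation: the `tool` decorator, `TOOLS` registry, `ToolError`, and the two-argument `execute_tool` signature are hypothetical, and the tool bodies are placeholders (no GPIO, no real restock policy).

```python
import json
from typing import Any, Callable


class ToolError(Exception):
    """Raised when a requested tool call fails validation."""


# Hypothetical registry: tool name -> (expected parameter types, implementation).
TOOLS: dict[str, tuple[dict[str, type], Callable[..., Any]]] = {}


def tool(**param_types: type):
    """Register a function as a callable tool with declared argument types."""
    def register(fn: Callable[..., Any]) -> Callable[..., Any]:
        TOOLS[fn.__name__] = (param_types, fn)
        return fn
    return register


@tool(sku_ids=list)
def propose_restock(sku_ids):
    # Placeholder policy: a flat 12 units per SKU.
    return {sku: 12 for sku in sku_ids}


@tool(reason=str)
def open_door(reason):
    # A real version would drive a GPIO pin; this only reports the intent.
    return {"door": "unlocked", "reason": reason}


def execute_tool(name: str, raw_arguments: str) -> Any:
    """Validate a model-requested call, then run it. Nothing runs on failure."""
    if name not in TOOLS:
        raise ToolError(f"unknown tool: {name}")
    param_types, fn = TOOLS[name]
    try:
        args = json.loads(raw_arguments)
    except json.JSONDecodeError as exc:
        raise ToolError(f"arguments are not valid JSON: {exc}") from exc
    if not isinstance(args, dict) or set(args) != set(param_types):
        raise ToolError(f"{name} expects exactly {sorted(param_types)}")
    for key, expected in param_types.items():
        if not isinstance(args[key], expected):
            raise ToolError(f"{name}.{key} must be {expected.__name__}")
    return fn(**args)


print(execute_tool("propose_restock", '{"sku_ids": ["SKU-3"]}'))  # {'SKU-3': 12}
```

Because the model can only name a registered tool and supply JSON arguments, an unknown tool or a mistyped argument is rejected before any side effect happens.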

Real-World Flow: Restock Trigger

- Cron fires `AgentBrain.handle_restock_trigger()`.

- Orchestrator hands the task + tool schema to GPT-4o.

- GPT calls `run_sql_template('low_stock_alert')` → returns SKUs.

- GPT calls `propose_restock([sku_ids])` → returns quantities.

- Final assistant message includes rationale and next steps.

- AgentBrain stores a memory, creates a task, and posts a Slack summary (e.g., “SKU-3 is low. Reordering 12 units.”).
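The flow above can be traced end to end with a scripted stand-in for the model. Everything here is illustrative: `scripted_model` replaces GPT-4o with fixed decisions, the `inventory` table, its columns, the SQL behind `low_stock_alert`, and the flat 12-unit quantity are assumptions, and the database is an in-memory SQLite instance.

```python
import sqlite3
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class ModelTurn:
    """Either a tool request or a final message (hypothetical shape)."""
    tool_name: Optional[str] = None
    tool_args: Optional[dict] = None
    message: Optional[str] = None


# Assumed template body; the real query is not shown in this document.
SQL_TEMPLATES = {
    "low_stock_alert": "SELECT sku FROM inventory WHERE quantity < 5 ORDER BY sku",
}


def make_db() -> sqlite3.Connection:
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE inventory (sku TEXT PRIMARY KEY, quantity INTEGER)")
    db.executemany("INSERT INTO inventory VALUES (?, ?)", [("SKU-1", 20), ("SKU-3", 2)])
    return db


def run_tool(db: sqlite3.Connection, name: str, args: dict) -> Any:
    if name == "run_sql_template":
        return [row[0] for row in db.execute(SQL_TEMPLATES[args["template"]])]
    if name == "propose_restock":
        return {sku: 12 for sku in args["sku_ids"]}  # placeholder quantity
    raise ValueError(f"unknown tool: {name}")


def scripted_model(history: list) -> ModelTurn:
    """Stand-in for GPT-4o: picks the next step from the tool outputs so far."""
    if len(history) == 0:
        return ModelTurn("run_sql_template", {"template": "low_stock_alert"})
    if len(history) == 1:
        return ModelTurn("propose_restock", {"sku_ids": history[0]})
    plan = history[1]
    summary = "; ".join(f"{sku} is low. Reordering {qty} units." for sku, qty in plan.items())
    return ModelTurn(message=summary)


def run_restock_flow(db: sqlite3.Connection, max_steps: int = 3) -> Optional[str]:
    history: list = []
    for _ in range(max_steps):
        turn = scripted_model(history)
        if turn.tool_name is None:
            return turn.message          # final assistant message
        history.append(run_tool(db, turn.tool_name, turn.tool_args))
    return None                          # step budget exhausted without an answer


print(run_restock_flow(make_db()))  # SKU-3 is low. Reordering 12 units.
```

Two tool calls plus one final message use exactly three steps, so this flow just fits the three-step cap of the orchestrator loop; with a smaller budget the sketch returns `None` instead of a partial answer.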

Frontend & Infra

- React 18 + TypeScript kiosk app (voice + touch), WebRTC chat, barcode scanning, Stripe checkout, camera preview.

- Backend: Python 3.11 + FastAPI; SQLite/SQLModel; Chroma vector memory.

- Edge deployment: Raspberry Pi OS; GPIO, fswebcam, Bluetooth ESC/P printer; Docker Compose.

Why it's cool

- It's got a real agent architecture with safe tool dispatch, typed schemas, and memory.

- Cross-domain execution: AI planning + embedded systems + SQL + payments + real-time comms.

- Responsible AI in practice: Guarded tool calls, validated inputs, sandboxed effects.